Since I started working at Scaleway two years ago, I have found out how fun and interesting it can be to self-host many common services.

The first service I self-hosted was my email. I was never a big fan of the big email/messaging apps, like Gmail or Outlook, because you don't really know what happens to your data and your privacy. I installed Mailcow, and I have been quite satisfied with it ever since.

Now, the most important thing is to back up your data, especially since emails can be critical in some cases (administrative, work and school emails). Out of the box, Mailcow provides a simple script to export its data. In this guide, we will send those exports to an S3 bucket.

Prerequisites:

- an active Mailcow installation

- an active Scaleway account, or an account with any other S3 provider

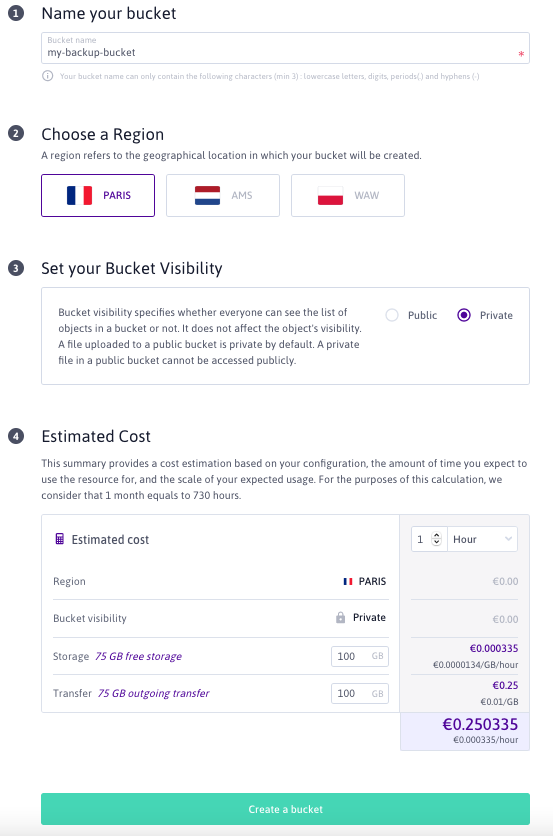

Step 1: Create your bucket with your S3 storage provider

Scaleway offers a lifetime free tier for its Object Storage service, so I will use Scaleway in this guide. Choose a name, a region, and the visibility of your bucket. Private is the sensible choice for backups.

You will see a cost estimate. As a reminder, the first 75 GB of storage and the first 75 GB of outgoing transfer are free.

Step 2: Install and configure Rclone

Now, let's install Rclone, which will transfer our backups to the S3 bucket.

wget https://downloads.rclone.org/rclone-current-linux-amd64.zip

apt install unzip

unzip rclone-current-linux-amd64.zip

cd rclone*linux-amd64/

mv rclone /usr/bin/
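The commands above download the amd64 (x86_64) build of rclone, which will not run on an ARM server. The helper below is a hypothetical sketch that picks the archive name from `uname -m`. It assumes the download server keeps publishing `rclone-current-linux-arm64.zip` alongside the amd64 file. It only handles those two architectures.

```shell
#!/bin/sh
# Hypothetical helper: map the architecture reported by `uname -m`
# (or passed as $1) to the matching rclone "current" Linux zip archive.
# Assumption: only the amd64 and arm64 archive names are handled here.
rclone_zip_name() {
  arch="${1:-$(uname -m)}"
  case "$arch" in
    x86_64|amd64) echo "rclone-current-linux-amd64.zip" ;;
    aarch64|arm64) echo "rclone-current-linux-arm64.zip" ;;
    *)
      echo "unsupported architecture: $arch" >&2
      return 1
      ;;
  esac
}

# Example: download the archive that matches this machine
# wget "https://downloads.rclone.org/$(rclone_zip_name)"
```

On ARM, remember to adapt the `cd rclone*linux-amd64/` path as well. Once the binary is in /usr/bin, `rclone version` confirms that the installation works.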

Rclone is now installed, so let's configure it:

rclone config

You will then be asked to create a new configuration:

2021/04/09 18:01:21 NOTICE: Config file "/root/.config/rclone/rclone.conf" not found - using defaults

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

Type "n". Then choose a name for the remote (here, "backup-scw") and press Enter.

n/s/q> n

name> backup-scw

Next, you will be asked for the storage type/provider:

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Provider (AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, Tencent COS, etc)

\ "s3"

5 / Backblaze B2

\ "b2"

6 / Box

\ "box"

7 / Cache a remote

\ "cache"

8 / Citrix Sharefile

\ "sharefile"

9 / Dropbox

\ "dropbox"

10 / Encrypt/Decrypt a remote

\ "crypt"

11 / FTP Connection

\ "ftp"

12 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

13 / Google Drive

\ "drive"

14 / Google Photos

\ "google photos"

15 / Hubic

\ "hubic"

16 / In memory object storage system.

\ "memory"

17 / Jottacloud

\ "jottacloud"

18 / Koofr

\ "koofr"

19 / Local Disk

\ "local"

20 / Mail.ru Cloud

\ "mailru"

21 / Mega

\ "mega"

22 / Microsoft Azure Blob Storage

\ "azureblob"

23 / Microsoft OneDrive

\ "onedrive"

24 / OpenDrive

\ "opendrive"

25 / OpenStack Swift (Rackspace Cloud Files, Memset Memstore, OVH)

\ "swift"

26 / Pcloud

\ "pcloud"

27 / Put.io

\ "putio"

28 / QingCloud Object Storage

\ "qingstor"

29 / SSH/SFTP Connection

\ "sftp"

30 / Sugarsync

\ "sugarsync"

31 / Tardigrade Decentralized Cloud Storage

\ "tardigrade"

32 / Transparently chunk/split large files

\ "chunker"

33 / Union merges the contents of several upstream fs

\ "union"

34 / Webdav

\ "webdav"

35 / Yandex Disk

\ "yandex"

36 / http Connection

\ "http"

37 / premiumize.me

\ "premiumizeme"

38 / seafile

\ "seafile"

Storage> s3

Here, type "s3" (option 4 in the list above), since we are using an S3-compatible provider. Next, choose the right provider:

Choose your S3 provider.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amazon Web Services (AWS) S3

\ "AWS"

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ "Alibaba"

3 / Ceph Object Storage

\ "Ceph"

4 / Digital Ocean Spaces

\ "DigitalOcean"

5 / Dreamhost DreamObjects

\ "Dreamhost"

6 / IBM COS S3

\ "IBMCOS"

7 / Minio Object Storage

\ "Minio"

8 / Netease Object Storage (NOS)

\ "Netease"

9 / Scaleway Object Storage

\ "Scaleway"

10 / StackPath Object Storage

\ "StackPath"

11 / Tencent Cloud Object Storage (COS)

\ "TencentCOS"

12 / Wasabi Object Storage

\ "Wasabi"

13 / Any other S3 compatible provider

\ "Other"

In my case, I choose "9", Scaleway Object Storage. If you are using another S3 provider, type its number, or "13" if it is not listed.

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Enter a boolean value (true or false). Press Enter for the default ("false").

Choose a number from below, or type in your own value

1 / Enter AWS credentials in the next step

\ "false"

2 / Get AWS credentials from the environment (env vars or IAM)

\ "true"

env_auth> false

Type "false" (option "1"), because we will enter the credentials manually in the next steps.

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

access_key_id>

To generate an access key in the Scaleway Elements console, have a look at this documentation, which explains the process. Paste the access key and press Enter.

AWS Secret Access Key (password)

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

secret_access_key>

Next, you will be asked for your secret key, which you got in the previous step. Paste it and press Enter.

Region to connect to.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amsterdam, The Netherlands

\ "nl-ams"

2 / Paris, France

\ "fr-par"

3 / Warsaw, Poland

\ "pl-waw"

region> 2

Choose your bucket's region. My bucket is located in "fr-par", so I select "2".

Endpoint for Scaleway Object Storage.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amsterdam Endpoint

\ "s3.nl-ams.scw.cloud"

2 / Paris Endpoint

\ "s3.fr-par.scw.cloud"

3 / Warsaw Endpoint

\ "s3.pl-waw.scw.cloud"

endpoint> 2

I also choose the Paris endpoint ("2"), to match my bucket's region.

Canned ACL used when creating buckets and storing or copying objects.

This ACL is used for creating objects and if bucket_acl isn't set, for creating buckets too.

For more info visit https://docs.aws.amazon.com/AmazonS3/latest/dev/acl-overview.html#canned-acl

Note that this ACL is applied when server side copying objects as S3

doesn't copy the ACL from the source but rather writes a fresh one.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Owner gets FULL_CONTROL. No one else has access rights (default).

\ "private"

2 / Owner gets FULL_CONTROL. The AllUsers group gets READ access.

\ "public-read"

/ Owner gets FULL_CONTROL. The AllUsers group gets READ and WRITE access.

3 | Granting this on a bucket is generally not recommended.

\ "public-read-write"

4 / Owner gets FULL_CONTROL. The AuthenticatedUsers group gets READ access.

\ "authenticated-read"

/ Object owner gets FULL_CONTROL. Bucket owner gets READ access.

5 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-read"

/ Both the object owner and the bucket owner get FULL_CONTROL over the object.

6 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-full-control"

acl> 1

Now you will be asked for the access policy (canned ACL) applied to your bucket and objects. Since backups are strictly personal, I choose "1", which is "private".

The storage class to use when storing new objects in S3.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Default

\ ""

2 / The Standard class for any upload; suitable for on-demand content like streaming or CDN.

\ "STANDARD"

3 / Archived storage; prices are lower, but it needs to be restored first to be accessed.

\ "GLACIER"

storage_class> 2

Next, you will be asked for the storage class. With Scaleway there are only two options besides the default. STANDARD is the best choice for a recovery plan, because GLACIER objects have to be restored before they can be accessed.

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n>

Type “n”, and you will see the following output:

Remote config

--------------------

[backup-scw]

type = s3

provider = Scaleway

env_auth = false

access_key_id = # Your access key goes here

secret_access_key = # Your secret key goes here

region = fr-par

endpoint = s3.fr-par.scw.cloud

acl = private

storage_class = STANDARD

--------------------

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d> y

Type "y" to save the remote.
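Confirming with "y" saves this section to /root/.config/rclone/rclone.conf, the path shown in the wizard's first NOTICE line. That file is plain INI, so you can script the setup instead of using the wizard. Below is a minimal sketch that writes the same remote directly. The function name is hypothetical, and the keys are placeholders you pass in.

```shell
#!/bin/sh
# Hypothetical helper: append the "backup-scw" remote shown in the recap
# to the rclone config file given as $1.
# $2 = Scaleway access key, $3 = Scaleway secret key (placeholders).
write_backup_remote() {
  conf="$1"
  mkdir -p "$(dirname "$conf")"
  cat >> "$conf" <<EOF
[backup-scw]
type = s3
provider = Scaleway
env_auth = false
access_key_id = $2
secret_access_key = $3
region = fr-par
endpoint = s3.fr-par.scw.cloud
acl = private
storage_class = STANDARD
EOF
  # The file contains your secret key: make it readable by its owner only
  chmod 600 "$conf"
}

# Example (replace the placeholders with your own keys):
# write_backup_remote /root/.config/rclone/rclone.conf "YOUR_ACCESS_KEY" "YOUR_SECRET_KEY"
```

Run it only once, because calling it again appends a duplicate [backup-scw] section. Afterwards, `rclone lsd backup-scw:` lists the buckets the remote can see, which is a quick way to check that the keys work.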

You will then see a short summary of your remotes:

Current remotes:

Name Type

==== ====

backup-scw s3

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

Type "q" to quit the configuration.

Step 3: Create a basic bash script

First, create the script file in /opt/scripts:

mkdir -p /opt/scripts && cd /opt/scripts # Create the scripts folder

nano backup.sh # Create the script file

Once "backup.sh" is open in your editor, paste the following content:

#!/bin/bash

# Back up the whole Mailcow installation to /opt/backup, deleting local backups older than 1 day

MAILCOW_BACKUP_LOCATION=/opt/backup /opt/mailcow-dockerized/helper-scripts/backup_and_restore.sh backup all --delete-days 1

# Mirror /opt/backup to the bucket: replace "backup-scw" with your Rclone remote name

# and "my-backup-bucket" with your bucket name

rclone sync /opt/backup backup-scw:my-backup-bucket

- Note that you can customize the temporary backup folder, currently /opt/backup. Any other folder works as well.

- You can choose to back up "all" (your entire Mailcow installation) or only specific components, such as "vmail" (the emails stored in all of your mailboxes).

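- The --delete-days 1 flag automatically removes local backups older than 1 day, so only the most recent backups are kept. Since rclone sync makes the bucket identical to /opt/backup, those old backups are removed from the bucket as well.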
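To see which backups --delete-days would treat as expired, here is a rough sketch of the same rule. It assumes Mailcow stores each backup in a folder named mailcow-<date> inside MAILCOW_BACKUP_LOCATION. It is an approximation written for this guide, not Mailcow's own code, and it only lists folders without deleting anything. It relies on GNU find's -mindepth, -maxdepth and -mmin options.

```shell
#!/bin/sh
# Approximation (not Mailcow's code) of the "--delete-days N" rule:
# print mailcow-* folders directly inside the backup location whose
# modification time is more than N days (N * 1440 minutes) old.
# Nothing is deleted.
list_expired_backups() {
  location="$1"
  days="$2"
  find "$location" -mindepth 1 -maxdepth 1 -type d -name 'mailcow-*' \
    -mmin "+$((days * 1440))"
}

# Example: list_expired_backups /opt/backup 1
```

Any folder this lists would also disappear from the bucket at the next rclone sync.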

To learn more about Mailcow Backup script settings and flags, have a look at their official documentation: https://mailcow.github.io/mailcow-dockerized-docs/b_n_r_backup/

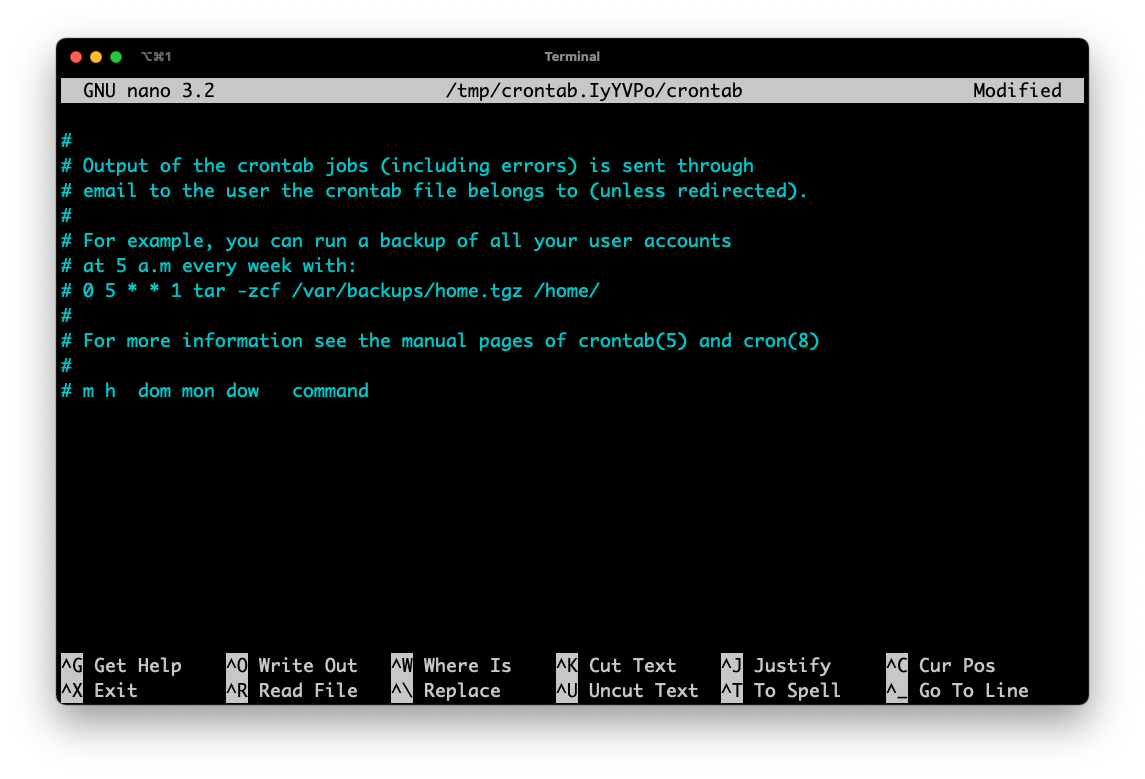

Step 4: Automate your backup process with a crontask

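Save and exit your text editor. Cron can only run the script if it is executable, so set the executable bit first:

chmod +x /opt/scripts/backup.sh

Then type crontab -e to edit your crontab.

In my case, I want to run the backup script every day at midnight, Paris time. Cron uses the server's system timezone, so make sure it matches yours. Add this line: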

0 0 * * * /opt/scripts/backup.sh

If you want to change the days and time, have a look at this Crontab tool.
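crontab -e is fine for a one-off setup. If you prefer to script it, the sketch below adds the entry only when it is missing, so re-running the setup never schedules the backup twice. The function name and the log file /var/log/mailcow-backup.log are assumptions. Redirecting the output to a log is optional, but it makes Step 5 easier.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a crontab file only if the exact
# same line is not already present (idempotent setup).
add_cron_entry() {
  file="$1"
  entry="$2"
  touch "$file"
  grep -qxF -- "$entry" "$file" || printf '%s\n' "$entry" >> "$file"
}

# Example: export the current crontab, add the nightly job (logging its
# output), then install the result.
# crontab -l > /tmp/current.cron 2>/dev/null
# add_cron_entry /tmp/current.cron '0 0 * * * /opt/scripts/backup.sh >> /var/log/mailcow-backup.log 2>&1'
# crontab /tmp/current.cron
```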

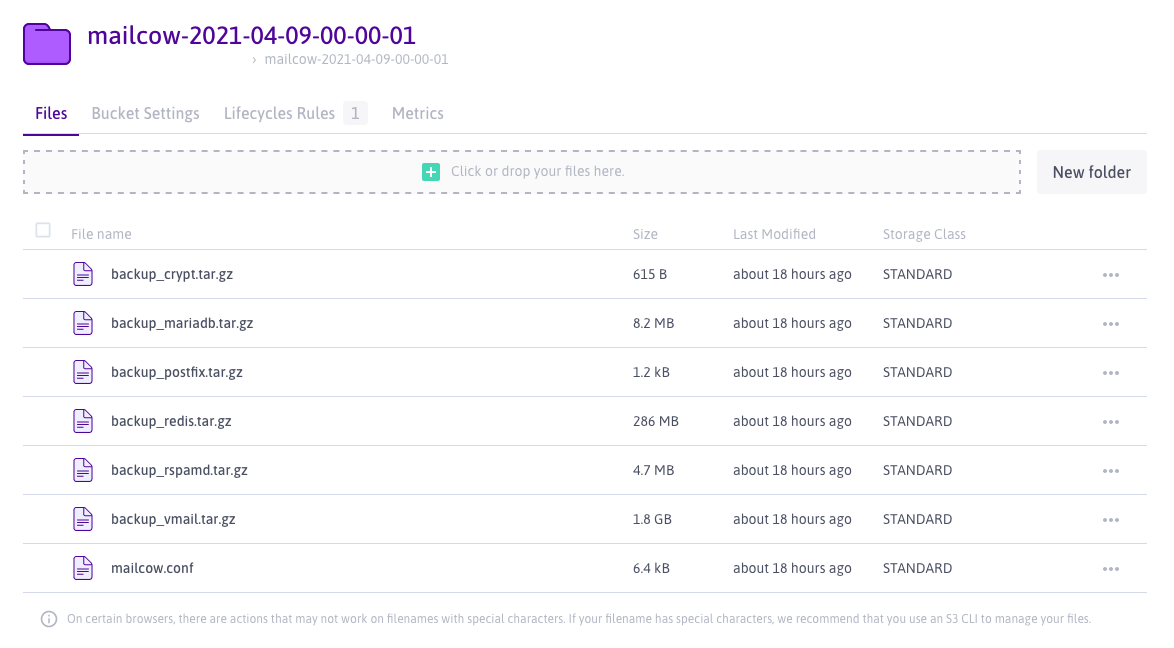

Step 5: Check if your backup is working as expected

To make sure the Rclone and crontab setup works as expected, wait until the scheduled time, then check that fresh backup files have been uploaded to your bucket. You can also run /opt/scripts/backup.sh once by hand to test it right away.
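Besides looking at the bucket, you can check on the server that the nightly job actually produced a backup. Here is a minimal sketch, assuming the backup folders are named mailcow-* inside /opt/backup. The function name and the 26-hour threshold (one day plus some margin) are arbitrary choices.

```shell
#!/bin/sh
# Hypothetical helper: exit with status 0 if at least one mailcow-* folder
# in the given location was modified within the last MAX_AGE_HOURS hours,
# non-zero otherwise.
recent_backup_exists() {
  location="$1"
  max_age_hours="$2"
  find "$location" -mindepth 1 -maxdepth 1 -type d -name 'mailcow-*' \
    -mmin "-$((max_age_hours * 60))" | grep -q .
}

# Example: warn if the nightly backup did not run
# recent_backup_exists /opt/backup 26 || echo "No backup in the last 26 hours" >&2
```

To compare the local folder with the bucket, `rclone check /opt/backup backup-scw:my-backup-bucket` reports files that differ or are missing on either side.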

To go further and learn how to restore your backup, have a look at the official Mailcow documentation available here.

Congratulations, you have successfully learned how to back up your Mailcow installation to an S3 bucket!